Empowering event organizers with pricing clarity

Team composition

Growth Specialist, Project Manager, and Marketer.

The challenge

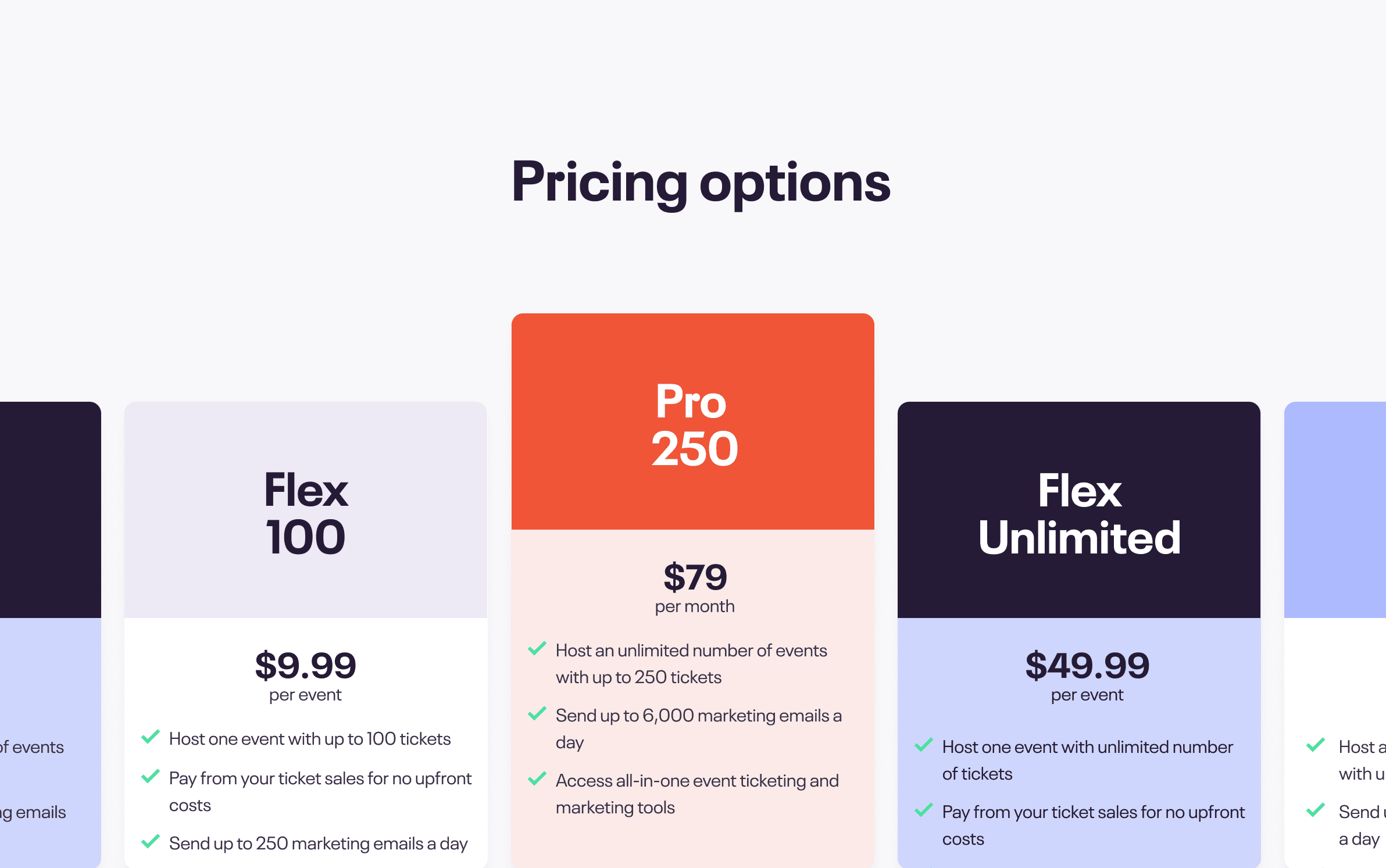

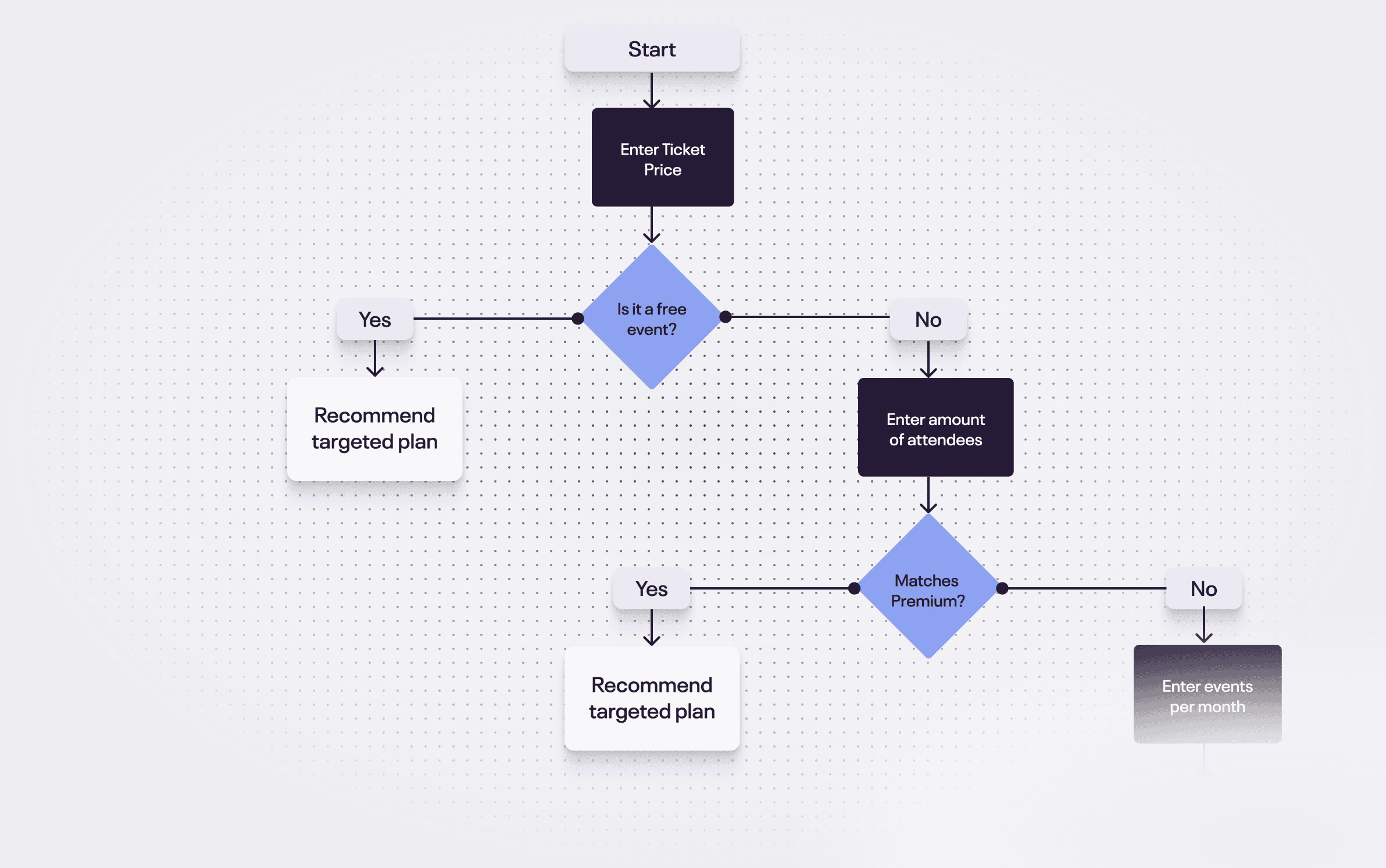

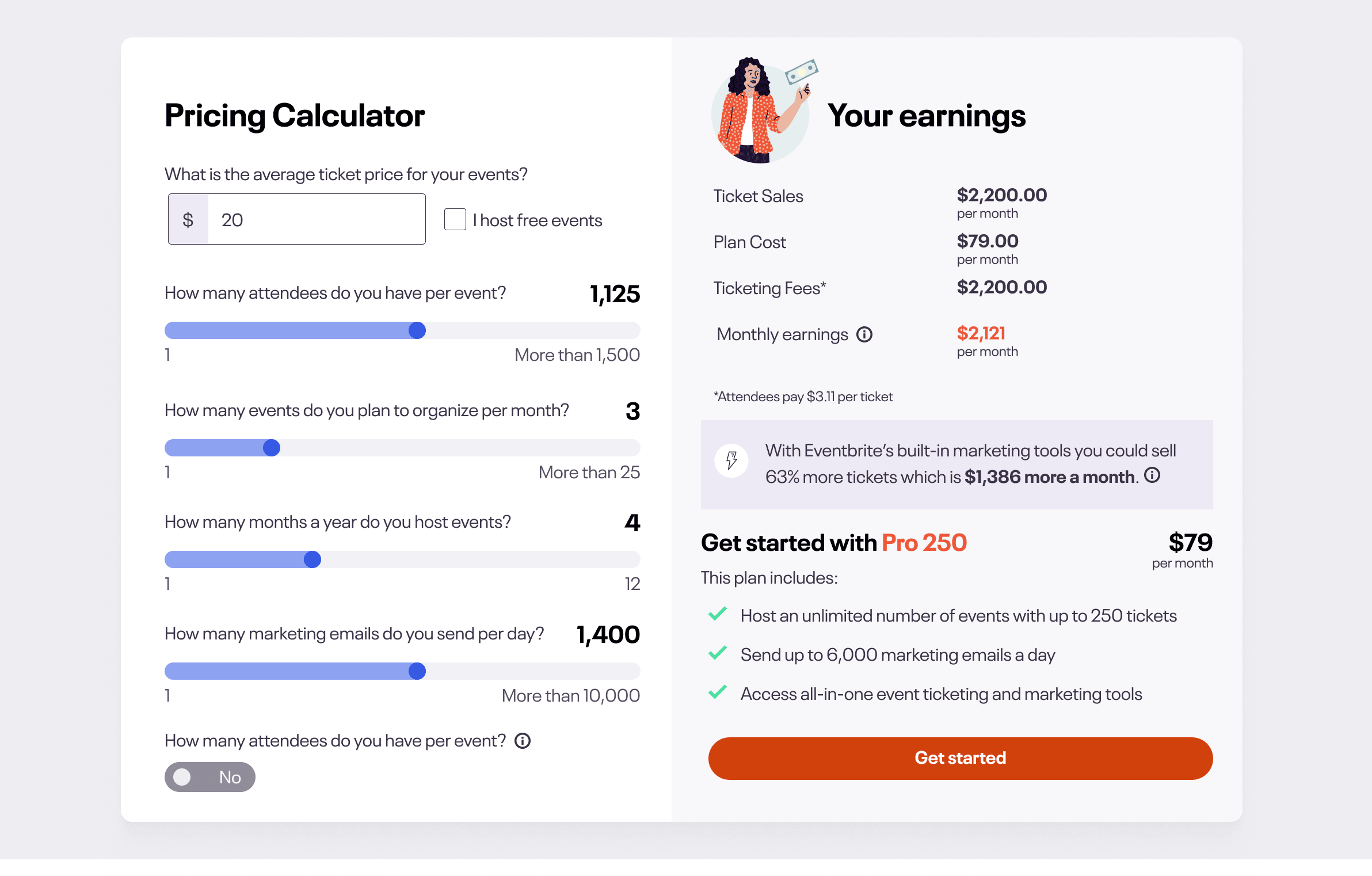

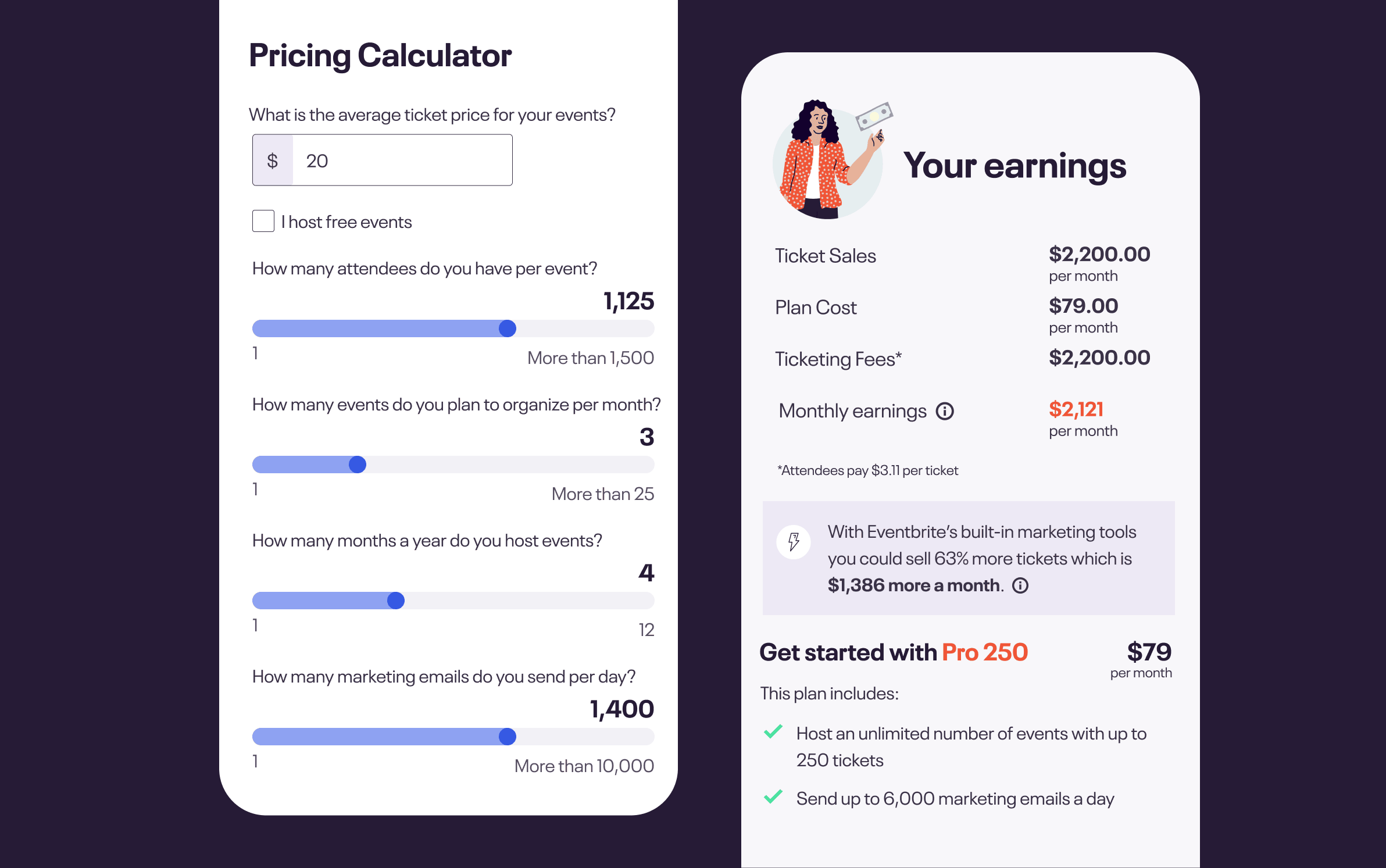

Eventbrite launched a new pricing model with expanded services (email marketing, ads, premium support), but event organizers couldn't tell which plan fit their business. Complex fees and unclear value propositions caused sign-up drop-off, blocking revenue growth during a critical launch period.

Core problems

Decision paralysis when comparing plans

Eroded trust from hidden fees

Unclear ROI for new premium features

The opportunity

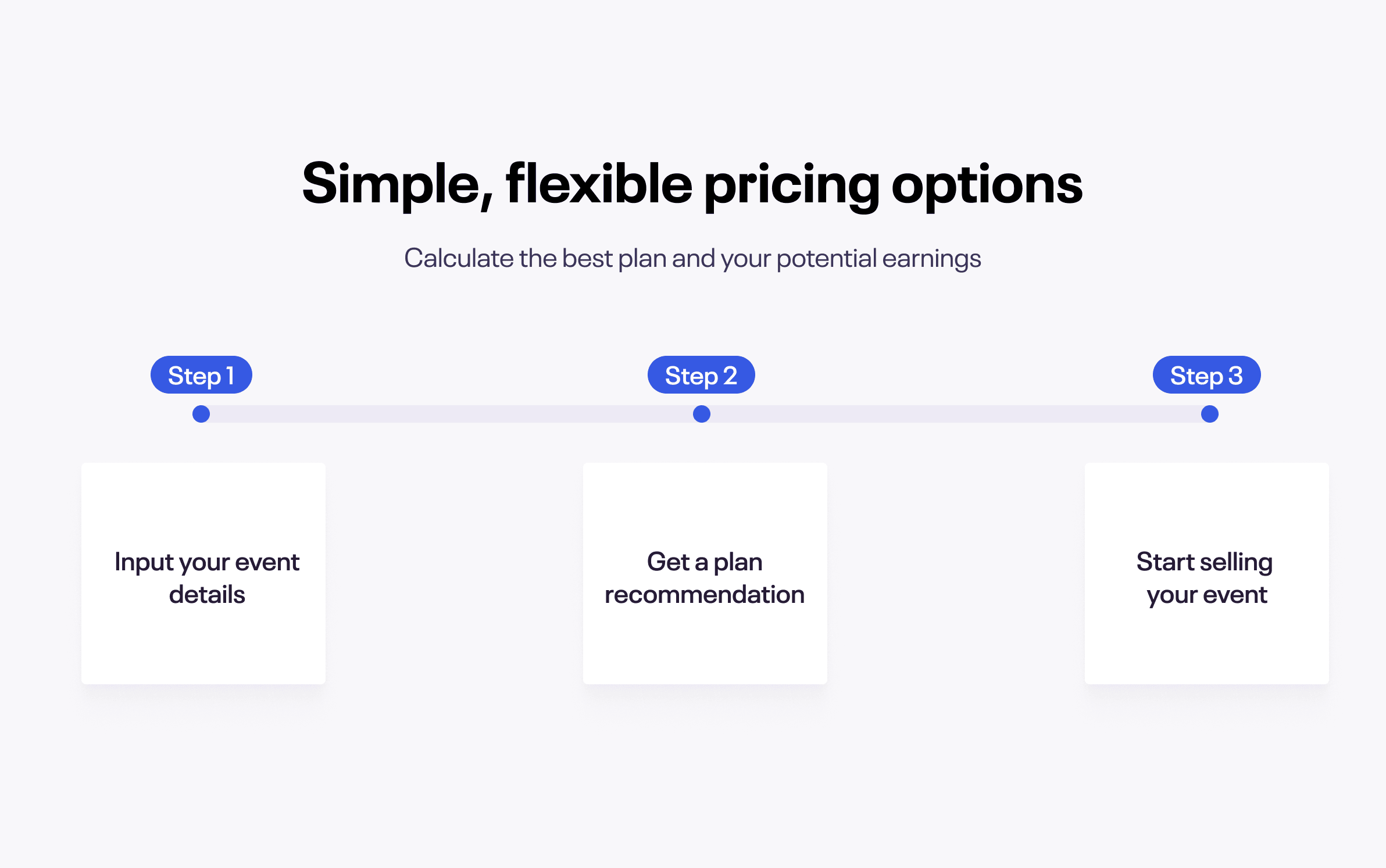

Help organizers quickly find the right plan while transparently showing Eventbrite's competitive value.